The Artist Against the Machine: Revisited

Have My Opinions on AI Changed?


Barely over a year ago, I posted my first essay to get attention outside my circle of friends and family:

It was an impassioned criticism of using AI to generate text in one’s creative writing. My argument centred around my fear of losing my artistic integrity (even my moral integrity) if I began to rely upon AI for my prose, story, structure, editing, and most importantly, voice:

If I used Generative AI to write my stories, my essays; even if I used it only as an editing tool - could I maintain my artistic integrity?

No.

I kept returning to no. Every time I asked that question. Who knows where the path will lead. Today, I may get it to rephrase my sentences; tomorrow, it may be generating whole articles using my voice.

I would be an artistic fraud. A cheat.

Not only would I be cheating others - my readers; I would be cheating my own humanity. I would be cheating my own journey, my own dream; of developing my craft, of developing my own unique take on the world, using the gifts given to me by God.

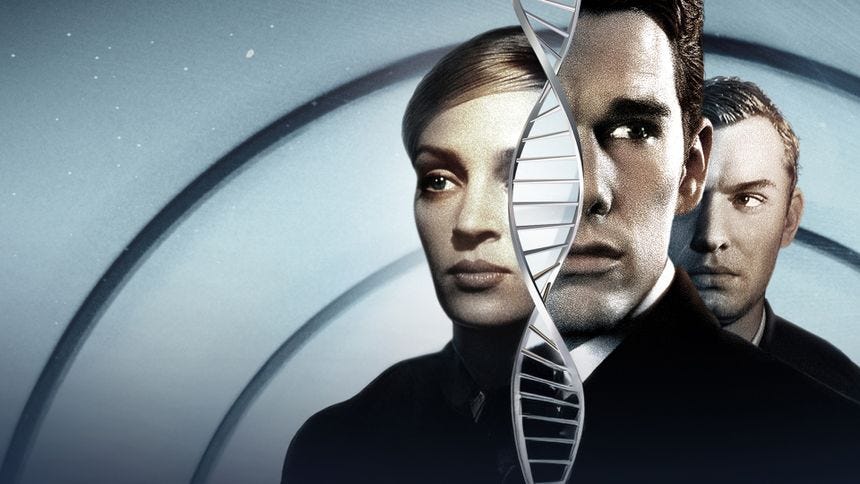

I used the science fiction film Gattaca as a metaphor for continuing to write as humans have done for millennia, instead of submitting to the artificial generator that is the Large Language Model. In the film, citizens have been divided into the genetically enhanced and the merely human, or ‘God-children’. When the protagonist, Vincent, is asked by his genetically superior brother how he beat him at a game of chicken, swimming out as far as they could into the sea, he replies that he didn’t save any energy for the swim back. The implication is that mindset and willpower matter more than biological advantage or systemic favour.

I drew the link to writers: to try to become a writer has always been an idiotic pursuit, but today one needs a healthy amount of insanity to believe one can succeed (note the intentional use of the word ‘healthy’). Not only has a machine been created that, purportedly, can do our job for us, but 1) if you are of a certain political persuasion and sex, editors dump your submissions in their rainbow-covered rubbish bins, and 2) much of the population has stopped reading books, especially fiction, shrinking your consumer base.

I concluded that, against this bulwark, the only way to succeed is to save no energy for the swim back. You can never give up. I think Bukowski summed up almost perfectly the mindset needed by artists who are forgoing mechanical puppeteering:

If you're going to try, go all the way. Otherwise, don't even start. This could mean losing girlfriends, wives, relatives and maybe even your mind. It could mean not eating for three or four days. It could mean freezing on a park bench. It could mean jail. It could mean derision. It could mean mockery--isolation. Isolation is the gift. All the others are a test of your endurance, of how much you really want to do it. And, you'll do it, despite rejection and the worst odds. And it will be better than anything else you can imagine. If you're going to try, go all the way. There is no other feeling like that. You will be alone with the gods, and the nights will flame with fire. You will ride life straight to perfect laughter. It's the only good fight there is.

It is okay if I am not read and die in obscurity because I know my nights flamed with fire - I never gave up. I also know, in contrast to Bukowski, that there are more important things for me than my writing. Life itself is a gift, and the people close to me in it. That’s more than enough.

But have my opinions on AI changed at all?

Are There Uses For AI?

Despite my general distaste for what I believe is humanity’s derangement through technology use, which spans porn addiction, reliance on chemicals (like skin cream), video games, sedentary lifestyles, obviously phones, and much else, I like to think I am not a neo-Luddite. Some technology is awesome. I do all my writing on a computer. I create electronic music. I DJ. I am obsessed with skiing and use incredibly advanced ski technology - and there’s more. Used intelligently and with discipline, while maintaining your ability to say no to most of it, technology can be a boon. So what about AI?

My fundamental stance hasn’t changed: if you use Generative AI, you are cheating yourself. Your writing ability is an organ in your body. You might be genetically predisposed for writing; the organ might be large to begin with and/or highly responsive to training. Yet in this it is no different from a muscle: if you do not train it properly, in all the ways one can train, it will not grow, become flexible, and become specific to you as an individual on this Earth.

One might say, “Hey - it helps me write, it gives me an idea when I’m stuck, or it helps me restructure my story so it makes more sense.” Training with an aid can be useful if you’re injured, or new to such a practice regime, but you never want to rely on the aid - training with an aid is not the same as training. If you have a big cycle race coming up but you’ve been training with an e-bike, do you think when the time comes you are going to match your opponents who have been training properly?

Some people suggest AI can be used for editing; I would warn against doing so. Editing is an essential part of the writing process, and a skill unto itself. Over time, you develop an idiosyncratic taste for what should stay and what should go, from how one contextual element should flow into the next to the order of the chapters themselves. That idiosyncratic tendency of yours is fundamental, I believe, to making a work of fiction or creative non-fiction your own. Some of my most cherished works by other authors are those I read and think, “Why did he keep this nonsense in?” or “Why didn’t he put this chapter before that?” It was these decisions, seen by some as wrong, that allowed the author’s personality and identity to shine through.

I have heard of some people using it as a kind of writing group, for feedback and the like. I could also imagine it being used to gauge whether one’s work fits or breaks the tropes of a certain genre, and therefore whether it will satisfy audience expectations. If you have used it in this manner, let me know in the comments; I am intrigued.

The only way I have ever used AI is for research. AI can generate answers about almost anything far faster and more efficiently than you can find them with search engines. Of course, the answers need to be analysed critically, but much information doesn’t call for that level of scrutiny (anything that isn’t socially or politically controversial), like minor factual questions you simply don’t know the answer to. Criticising someone for ‘using AI in their writing’ when they use it in this manner seems bizarre to me.

I Don’t Care if You Use It

The biggest (really, the only) shift in my opinion is that I do not care whether other people use it, or even lie about using it. Nothing was fair to begin with; this is just another example. Getting enraged about it is pointless. I just know I could never look myself in the mirror if I used Generative AI. It’s pretty simple.

While the dopamine from receiving a like, a comment, or a new subscriber on Substack is nice, it’s also trivial, mildly pathetic, and temporary. My self-worth, aside from close friends and family, is not based on the opinions of other people. It is based upon whether I am thinking, feeling, and behaving in accordance with my values. And one of my most important values is my hatred of deception.

While deception is natural and necessary, and likely healthy in small doses, our world has an overflow of it. It is why we have fallen so far, I believe. I spent much of my late adolescence rooting out my inner tendency to lie. Now, in my daily actions, although I know I have blind spots like anyone, I try as hard as I can not to deceive, even at a cost to myself. It is why I nearly got cancelled because of this Substack: because I tried not to deceive you, my reader.

But it is far more essential that I do not deceive myself. If I completed a cycle race on an e-bike and pretended I was using a normal bike, I wouldn’t fear the mocking laughter of others anywhere near as much as I would fear it from myself. That homunculus in my mind would call me a weak little pussy, and much else, but worst of all he would call me a liar.

And I would rather fail at my writing, knowing I tried to succeed honestly and nobly, than succeed by relying on Large Language Models. Even as I wrote that line, a mocking grin tore across my face at the image of myself receiving accolades from around the globe while knowing it was all based on bullshit.

Nah, bro. I don’t want that.

I’m only sharing this because you might feel the same, or you might be in the same moral quandary about your writing integrity (or your training) and be unsure which way to turn. If using AI causes you no moral or artistic dilemma, that’s great for you. But if it does, consider my words, because every time you use it you might be selling your soul, little by little.

Thank you all for reading.

If you enjoyed this, please consider giving it a like, or if you have some thoughts, share them in the comments.


If you want to support me further, and help me realise my dream of becoming an independent writer and voice, you can upgrade to a paid subscription or you can simply give me a tip.

Chur, and have a good day and night,

The Delinquent Academic

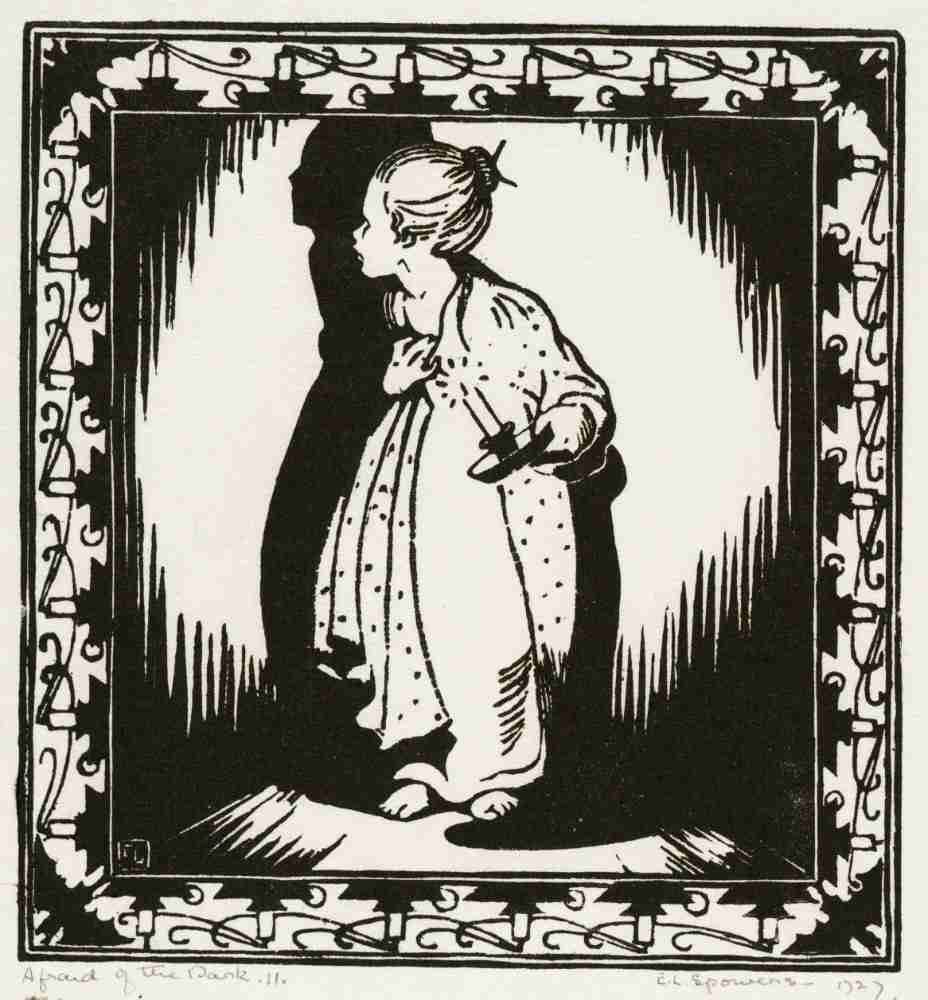

This whole AI thing has been really weighing on me emotionally the last couple of months. I have this crazy feeling of loss, like something has broken and won't be put back. I have been half-hoping that a solar flare would set us back 50 or 60 years, just to give me more time (before what, I don't know).

I am hoping now, as el gato malo says in his piece linked below, that the rise of AI and the knowledge that almost anything could be fake, could be a scam, may force us to lose "trust in anything out of immediate sensory sphere" and thus ruin "long distance trust" and globalization, thereby forcing us to return to real life and the real world.

https://boriquagato.substack.com/p/will-ai-shrink-the-world?selection=1568c0cd-2c6b-4f6a-bd27-adf1499cc285

As with anything, AI/LLMs are ultimately tools developed for human use. And as with any tools, they can be misused and abused, leading to them becoming detrimental and potentially harmful. We have seen some of the results of that in creative fields.

My stance on AI is largely in line with your own. I don't care for it. I don't want it. I won't use it, at least not to my active knowledge. My reasons for this are many, ranging from a lack of reliability on the research side (you mentioned the potential usefulness of such models for answering simple questions quickly, but I've seen many heaping double-handfuls of instances where these LLMs' answers to even simple questions are flat-out wrong) to the one we most closely align on, the question of personal integrity.

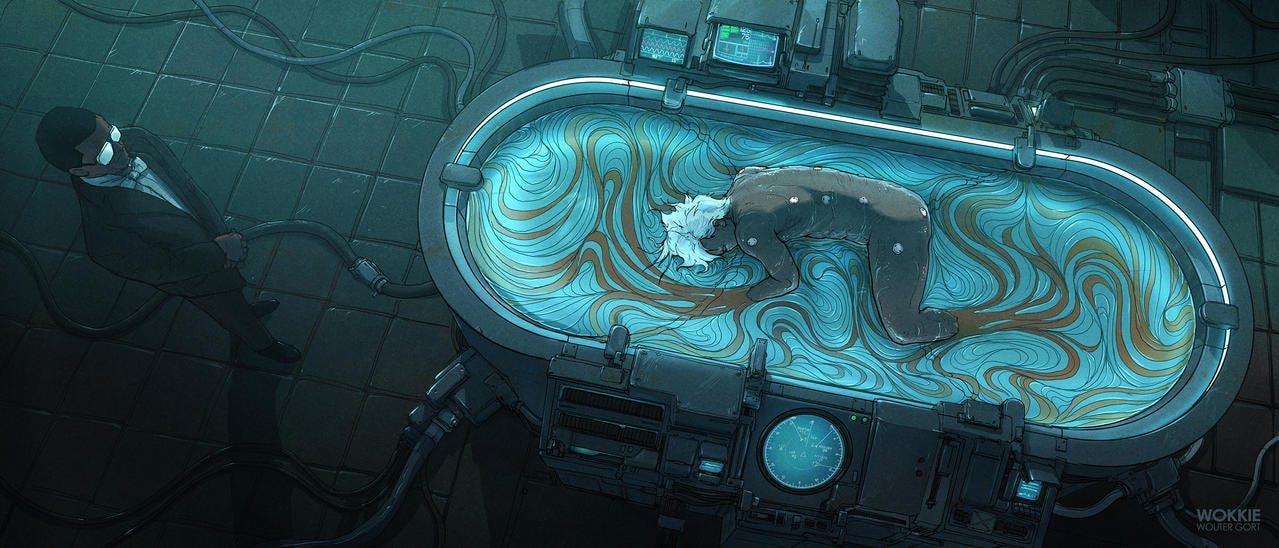

I think where we differ most is that my distaste for it does extend to others. When I see obvious signs that others have used AI in their work, I'm inclined to avoid it. It's not a 100% thing; there are exceptions, some of whom use this very platform. Given my creative background, though, a pang of disgust inevitably forms in my stomach when I see writers turn to AI for accompanying artwork, or illustrators turn to it for blurbs of text.

Ultimately, the tool is here to stay. I don't think we're going to get rid of it, so instead I'll hope that we can refine it into something more positive than it is now.

I'll hope for that, but I won't, and don't, expect it.